OpenAI Mac App Introduces Screen Reading Capability: A Game-Changer for Productivity

The OpenAI Mac app has just rolled out a groundbreaking feature: the ability to read content from the screens of other Mac apps.

Whether you’re juggling research, handling complex workflows, or managing data across multiple tools, this new capability is designed to simplify your tasks and supercharge your productivity.

In this blog post, we’ll explore what this feature is, how it works, and the exciting ways you can use it in your day-to-day life.

What is Screen Reading in the OpenAI Mac App?

Screen reading in the OpenAI Mac app allows you to select a portion of your screen displaying content from other apps—be it text, tables, code, or visuals—and let the app interpret and act on it. By combining the power of OpenAI’s language models with this innovative functionality, you can now process, summarize, or interact with screen data effortlessly.

What you can do with it:

1. Summarizing Complex Texts: Highlight long reports, articles, or emails, and get concise summaries instantly.

2. Understanding Code: Capture code snippets and have them explained or debugged right within the app.

3. Extracting Data from Tables: Select spreadsheets or tables, and convert them into usable formats or actionable insights.

4. Translating Content: Instantly translate foreign-language text displayed in any app into your preferred language.

How Does It Work?

Note: This functionality is currently in early beta and available to ChatGPT Plus and Team users. OpenAI plans to broaden access and support for more applications over time.

1. Enable Screen Access:

Upon first use, you’ll be prompted to grant the OpenAI Mac app permission to access your screen. This is a standard macOS request for apps that need to interact with the display.

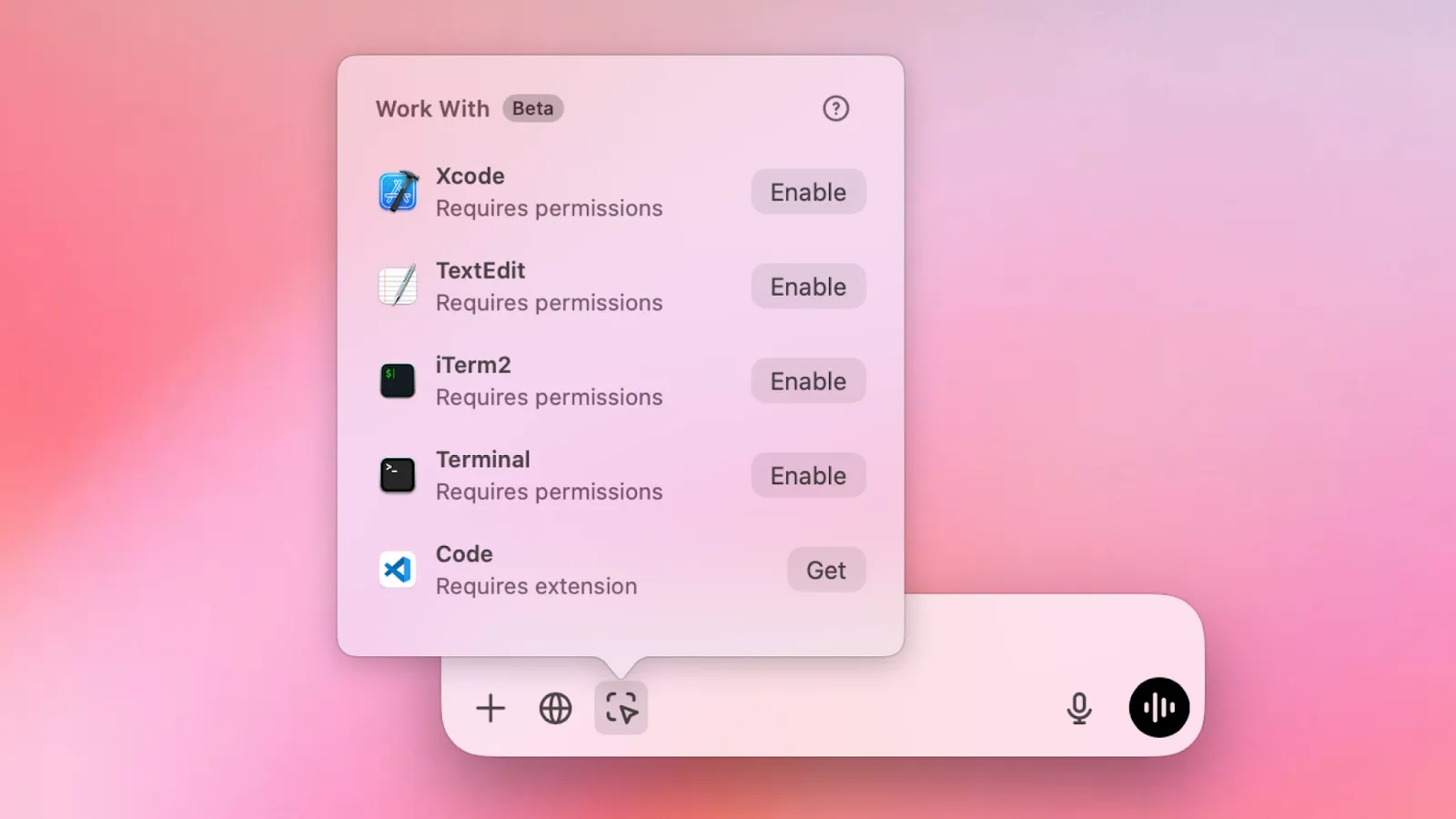

2. Select an App to Read:

Use the app’s “Screen Reader” tool to drag and select the portion of your screen you want it to process.

3. Perform Actions:

Once the selection is made, the app provides a context-aware menu of actions. For instance:

• Extracting and summarizing text.

• Offering insights or suggestions based on the content.

• Generating a response, code, or action plan.

Limitations

To read content from other apps, OpenAI relies mostly on the macOS accessibility API to read text and pass it to ChatGPT, according to OpenAI desktop product lead Alexander Embiricos. That means it will not work with every app. VS Code, for example, requires a special extension to get things up and running.
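
To make the limitation concrete, here is a minimal sketch of why an accessibility-based reader works for some apps and not others. It is a toy model, not the macOS API and not OpenAI's implementation: the `Node` class, the `collect_text` function, and both sample windows are hypothetical, and only the role names (`AXWindow`, `AXStaticText`, and so on) are borrowed from macOS accessibility conventions. The idea is that a reader can only walk the tree of elements an app exposes and gather whatever text those elements carry.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Toy stand-in for one accessibility element (hypothetical, not the macOS API)."""
    role: str
    value: Optional[str] = None
    children: List["Node"] = field(default_factory=list)


# Roles treated as text-bearing in this sketch (an assumption for illustration).
TEXT_ROLES = {"AXStaticText", "AXTextArea", "AXTextField"}


def collect_text(node: Node) -> List[str]:
    """Depth-first walk that gathers the text exposed by text-bearing elements."""
    found = []
    if node.role in TEXT_ROLES and node.value:
        found.append(node.value)
    for child in node.children:
        found.extend(collect_text(child))
    return found


# An app that exposes its text through the accessibility tree.
notes_window = Node("AXWindow", children=[
    Node("AXStaticText", "Meeting notes"),
    Node("AXGroup", children=[Node("AXTextArea", "Ship the beta on Friday.")]),
])

# An app that exposes only an opaque container with no text elements.
canvas_window = Node("AXWindow", children=[Node("AXGroup")])

print(collect_text(notes_window))
print(collect_text(canvas_window))
```

In this model the second window yields nothing to read, which is the kind of gap that a dedicated extension would have to fill.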

What’s Next?

This move toward agent-like functionality is particularly significant in light of reports that OpenAI is developing a general-purpose AI agent, codenamed “Operator.” According to Bloomberg, Operator is slated for release in early 2025 and aims to compete with other emerging general-purpose AI agents, such as Anthropic’s desktop-focused solution and Google’s rumored “Jarvis” agent.

OpenAI is rolling out these new capabilities on macOS first, ahead of Apple’s planned ChatGPT integration in December. However, it remains unclear when the feature, named “Work with Apps,” will be available on Windows, even though that platform is owned by OpenAI’s largest investor, Microsoft.